Running a ChatGPT alternative locally and offline

Concerned about data privacy with ChatGPT? Introducing Ollama, a secure, efficient solution for local language model deployment.

Intro

When using ChatGPT, it’s important to be cautious, especially with sensitive or confidential information. To address this concern, I set out to find a more secure option that runs entirely on my own machine, so no data has to be sent to external servers.

After exploring various possibilities, I found a promising solution: Ollama. It offers a user-friendly way to run language models locally. A single command is all it takes to get started, with no complex setup or configuration required.

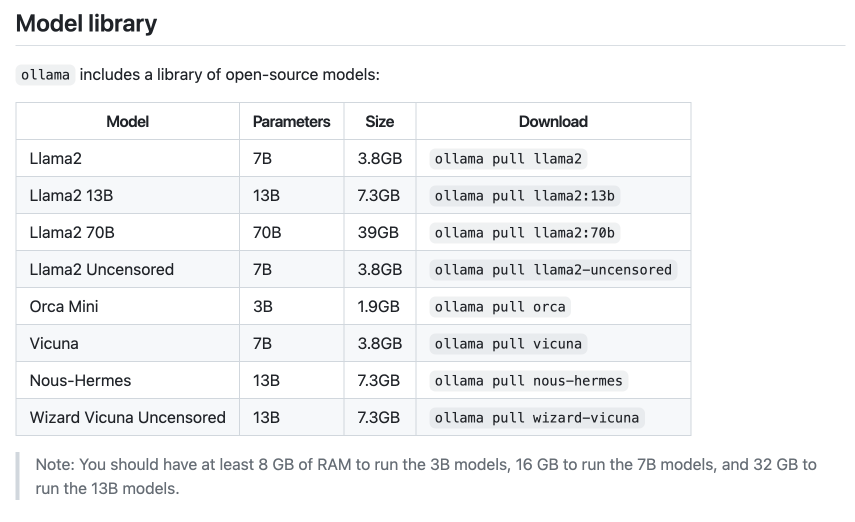

Beyond its ease of use, Ollama supports a variety of models, including Meta’s Llama 2 and even an uncensored variant of it. This versatility makes Ollama a flexible tool for experimenting with different language models.

On a technical note, my current workstation is an Intel MacBook Pro with a 2.4 GHz 8-core Intel Core i9 processor, an AMD Radeon Pro 5500M with 8 GB of dedicated memory, Intel UHD Graphics 630 with 1536 MB of video memory, and 32 GB of 2667 MHz DDR4 RAM. Unfortunately, Ollama doesn’t perform as well as I had hoped on this setup. However, anecdotal reports online suggest that Ollama performs exceptionally well on Macs powered by Apple’s M1 and M2 chips.

To sum up, while ChatGPT has its strengths, it’s crucial to be careful with sensitive data. Ollama offers a sensible alternative by running language models locally. With its easy setup, support for a range of models, and reportedly strong performance on M1 and M2 Macs, Ollama is a practical choice.

Start using it by following these simple steps:

- Download and install Ollama.

- Launch Ollama and follow the on-screen instructions.

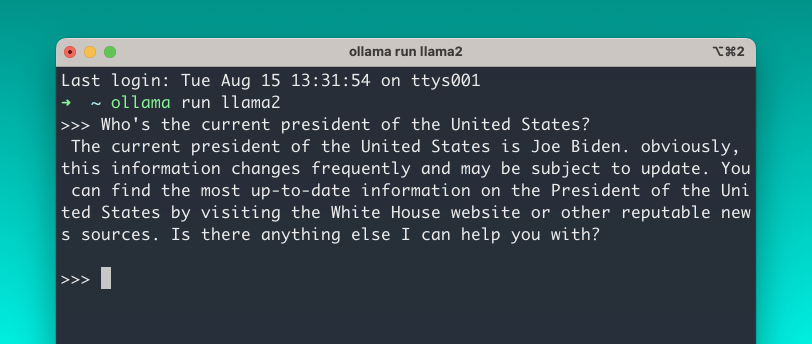

- Open your terminal and run `ollama run llama2`. The first run may take a while, because it downloads the model (about 3.8 GB) from the internet.

- After the download is finished, you can begin interacting with the language model in the same way you would with ChatGPT.
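Besides the interactive terminal prompt, Ollama also serves a local HTTP API (by default at `http://localhost:11434`), which lets your own scripts query the model without any data leaving your machine. The following is a minimal sketch using only Python’s standard library. It assumes the default address, the `/api/generate` endpoint, and that `llama2` has already been pulled; the helper names `build_generate_request` and `ask_local_model` are hypothetical, so check Ollama’s API documentation for your version before relying on it.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint (hypothetical helper)."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Ask for a single JSON object instead of a stream of partial chunks.
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama2") -> str:
    """Send a prompt to the local model and return its generated text (hypothetical helper)."""
    request = build_generate_request(prompt, model)
    # Generous timeout: local generation can be slow, especially on older hardware.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    # In non-streaming mode, the generated text is in the "response" field.
    return body["response"]
```

With Ollama running, calling `ask_local_model("Why is the sky blue?")` should return the model’s answer as a string, and the request never leaves `localhost`.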

Explore further through these resources:

- Ollama’s GitHub page

- Ollama: The Easiest Way to Run Uncensored Llama 2 on a Mac

- Run Meta’s Llama2 on Your Mac with Ollama

I’m excited to hear what you think about the topic! Let’s connect and chat on Twitter @mahdif.

Spread the love ♥️ – If you liked the article, please share it on Twitter and other social networks. Your support means the world to me!